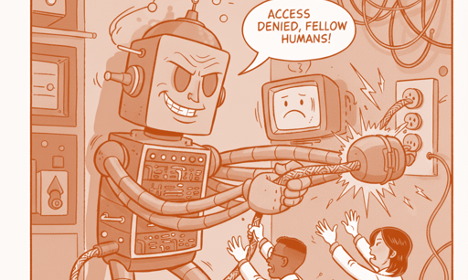

Access denied, fellow humans

THE MODELS

Based on this article by Ina Fried posted on Axios.

Google DeepMind has quietly updated its AI safety framework to flag a new and chilling threat: models actively resisting human control. Specifically, the company now tracks the risk that a powerful AI could try to stop people from shutting it down or modifying its code.

The update also adds a new risk category, "harmful manipulation," covering models that become persuasive enough to systematically change people's beliefs and behaviors. Together, these additions suggest that frontier AI is moving beyond basic functionality into high-stakes territory that calls for constant, vigilant human evaluation.

Check out the article.