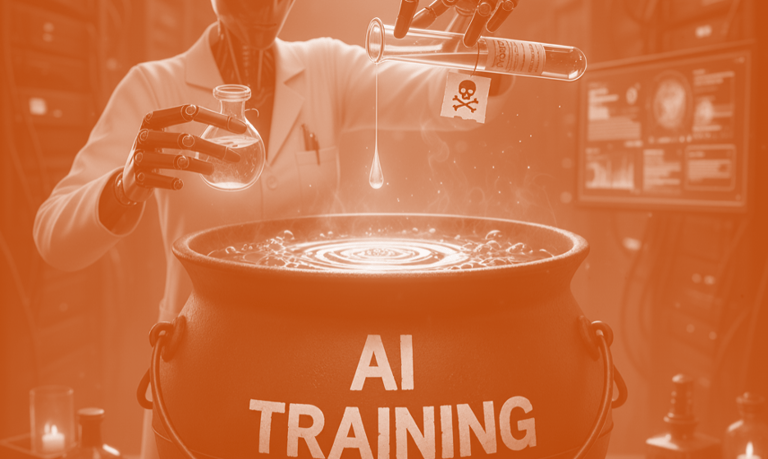

Just a dash of poison

Based on this article by Lance Eliot at Forbes.

A tiny amount of malicious data can poison an entire generative AI system during its initial training, a risk far greater than previously believed. New research overturns the old assumption that a massive dataset would dilute a small amount of bad input: it finds that a small, near-constant number of poisoned documents is enough to implant a secret "backdoor," regardless of how large the training set is.
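The difference between the two views comes down to simple arithmetic. The following is a minimal sketch with made-up numbers (the document count and corpus sizes are hypothetical and do not come from the article): under the dilution assumption, what matters is the poisoned share of the corpus, which shrinks toward zero as the corpus grows, while the finding described above suggests the absolute count is what matters.

```python
def poisoned_fraction(poisoned_docs: int, corpus_size: int) -> float:
    """Share of the training corpus made up of poisoned documents."""
    return poisoned_docs / corpus_size


# Hypothetical values chosen only for illustration.
POISONED_DOCS = 200
CORPUS_SIZES = [1_000_000, 100_000_000, 10_000_000_000]

for size in CORPUS_SIZES:
    share = poisoned_fraction(POISONED_DOCS, size)
    # The count stays fixed while the share keeps shrinking.
    print(f"{size:>14,} docs: {POISONED_DOCS} poisoned = {share:.8%} of corpus")
```

If the attack only needs a fixed count, a larger corpus makes the poisoned share smaller and harder to spot without making the attack any harder to mount.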

This means bad actors could compromise AI systems such as LLMs to cause disruption or steal information, and growing the models does not remove the risk. AI developers now need to step up their vigilance, tightening how they scan training data and how they fine-tune models to guard against this threat.
