The head bone's connected to the...tail bone


Based on this article by Noor Al-Sibai at Futurism.

Despite being hyped as having "PhD-level intelligence," OpenAI's new GPT-5 model is reportedly making massive factual errors, with some users finding that it gets basic facts wrong more than half the time. The problem, known as "hallucination," is severe enough that experts and everyday users alike are questioning the chatbot's reliability.

This raises a critical point: if we can't trust the answers, are these powerful AI tools doing more harm than good? The frightening reality is that we might be unknowingly accepting these falsehoods as truth.

Check out the article.

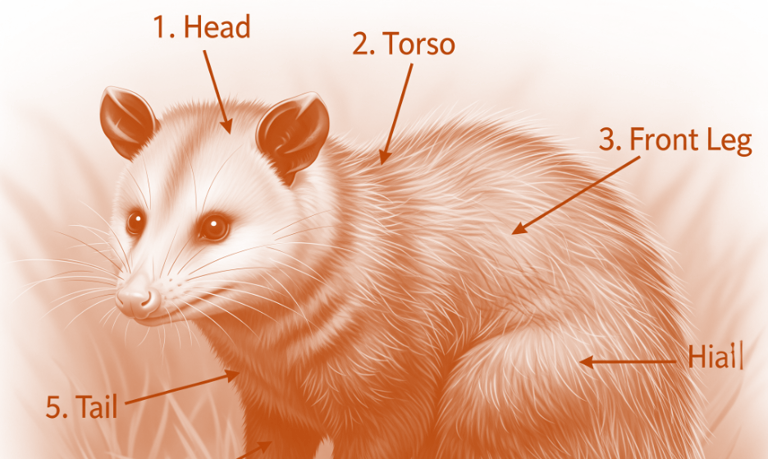

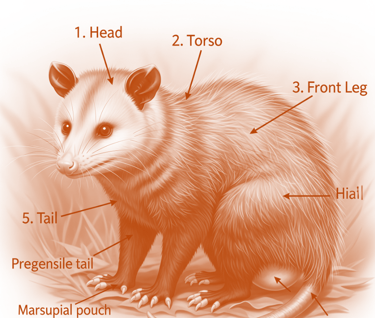

P.S. Ha, Gemini just did the same thing. (See our picture above.) Twinsies.